Activity Recognition with ML and IMU

For this lab, we collected accelerometer data from a Seeed XIAO nRF52840 Sense board and trained a machine learning model to recognize gestures from it.

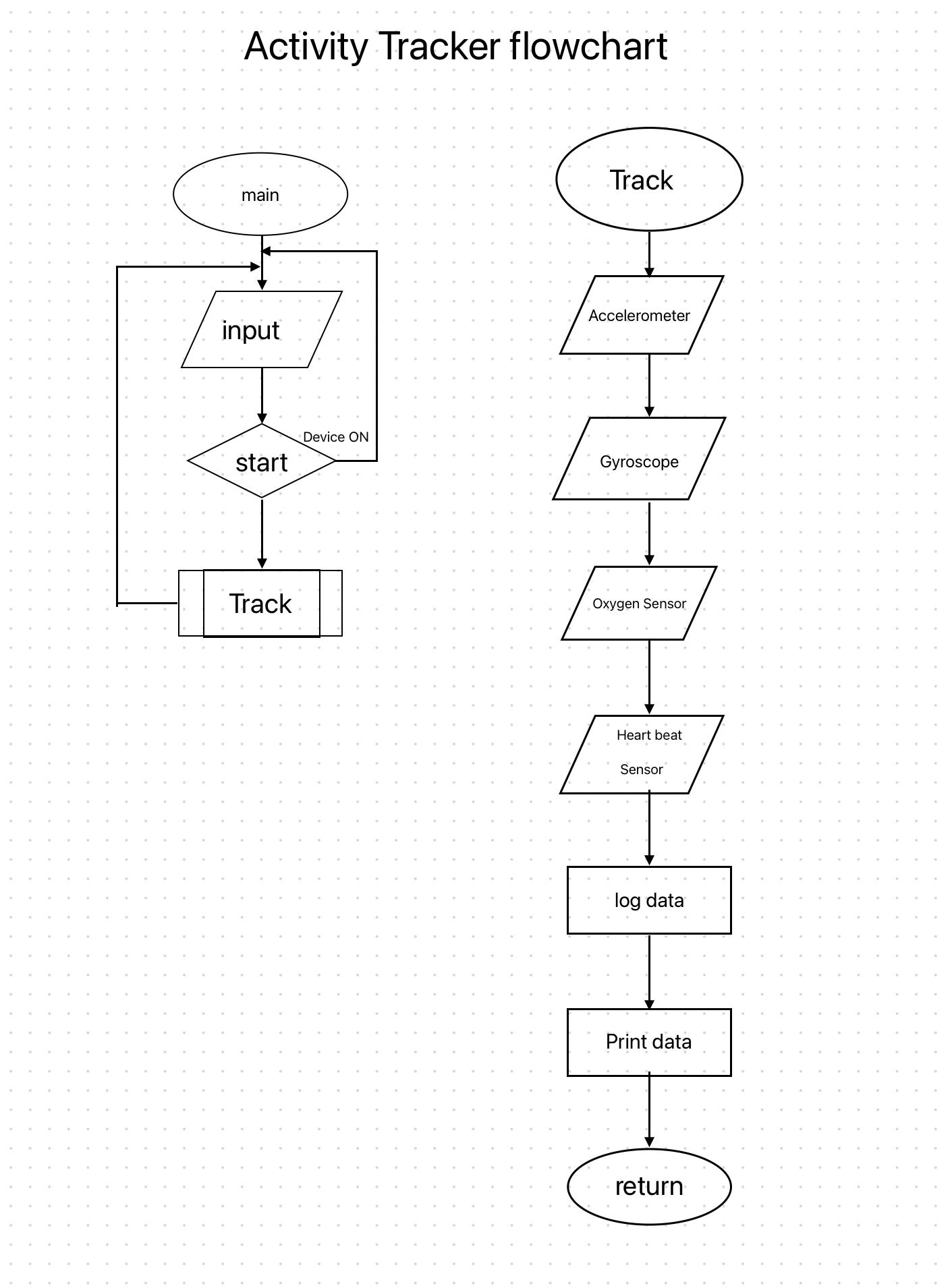

At the beginning of class, we created a flowchart of an activity tracker.

Trial Data Collection

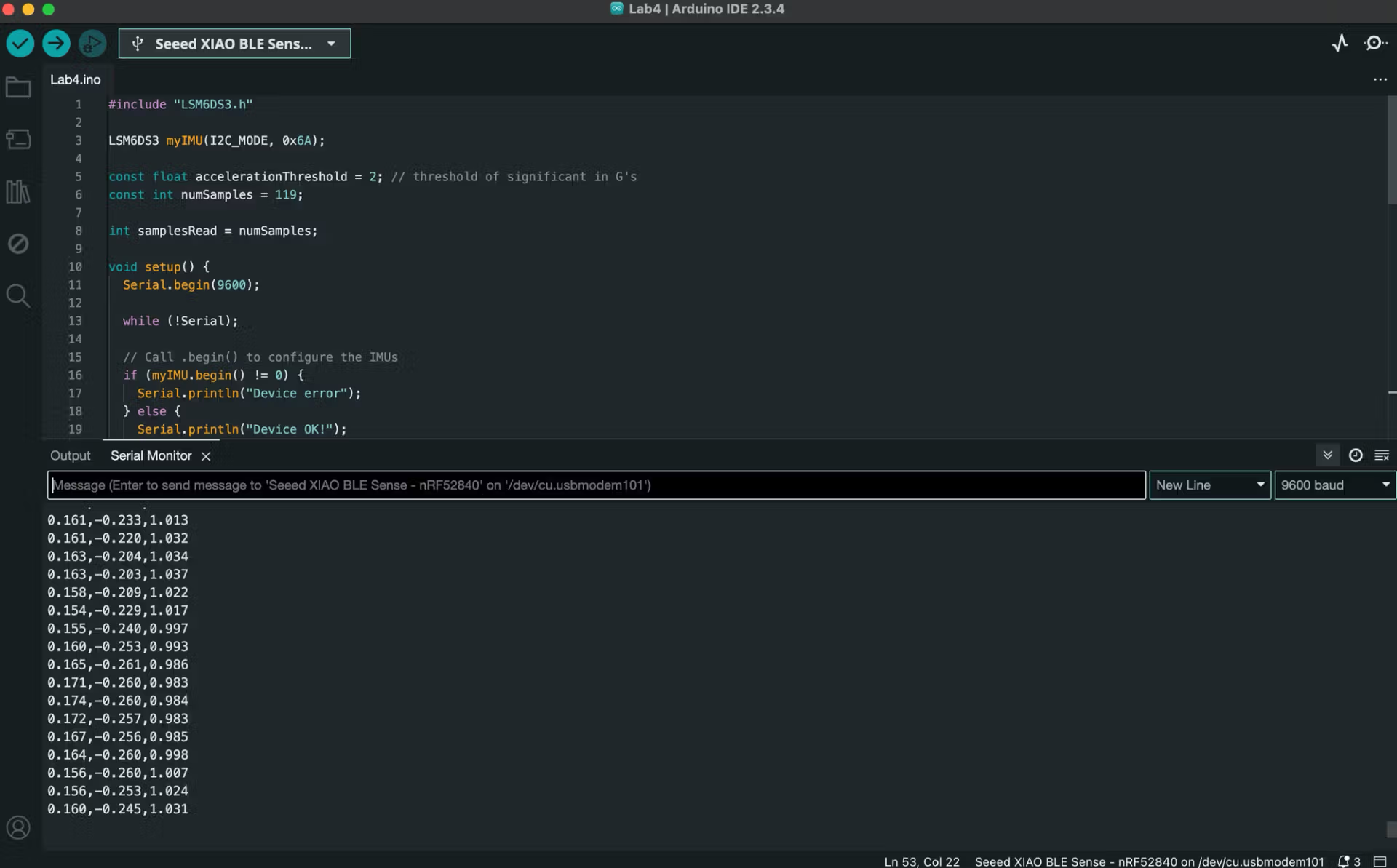

We used a simple Arduino sketch to stream accelerometer data from the Seeed XIAO board. The code is shown below:

    #include "LSM6DS3.h"

    // IMU on the I2C bus at address 0x6A
    LSM6DS3 myIMU(I2C_MODE, 0x6A);

    const float accelerationThreshold = 2;  // motion threshold, in g
    const int numSamples = 119;             // samples recorded per gesture

    int samplesRead = numSamples;

    void setup() {
      Serial.begin(9600);
      while (!Serial);  // wait for the serial port to open

      // Call .begin() to configure the IMU
      if (myIMU.begin() != 0) {
        Serial.println("Device error");
      } else {
        Serial.println("Device OK!");
      }
    }

    void loop() {
      float x, y, z;

      // Wait for significant motion before recording
      while (samplesRead == numSamples) {
        x = myIMU.readFloatAccelX();
        y = myIMU.readFloatAccelY();
        z = myIMU.readFloatAccelZ();

        // Sum of the absolute accelerations on all three axes
        float aSum = fabs(x) + fabs(y) + fabs(z);

        // Check if it's above the threshold
        if (aSum >= accelerationThreshold) {
          // Reset the sample read count
          samplesRead = 0;
          break;
        }
      }

      // Record numSamples readings and stream them as "x,y,z" lines
      while (samplesRead < numSamples) {
        x = myIMU.readFloatAccelX();
        y = myIMU.readFloatAccelY();
        z = myIMU.readFloatAccelZ();

        samplesRead++;

        Serial.print(x, 3);
        Serial.print(',');
        Serial.print(y, 3);
        Serial.print(',');
        Serial.print(z, 3);
        Serial.println();

        if (samplesRead == numSamples) {
          // Optional blank line to separate gestures (disabled)
          // Serial.println();
        }
      }
    }

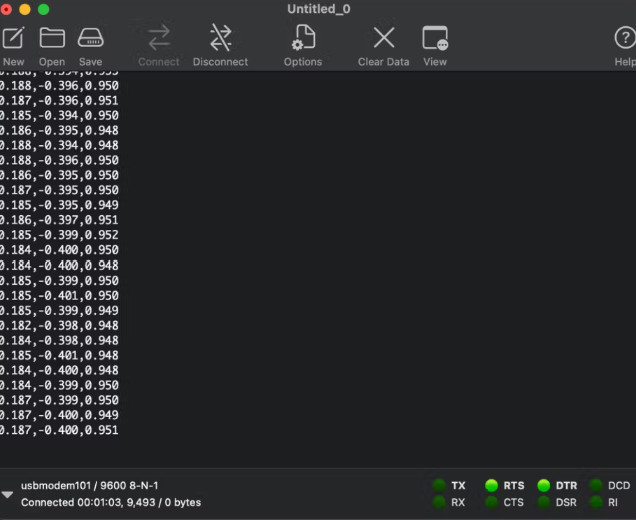
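The trigger condition in the sketch can be checked in isolation. For an ideal, stationary sensor the only acceleration is gravity (magnitude about 1 g), so the sum |x| + |y| + |z| stays between 1 and √3 ≈ 1.73 g whatever the orientation, which is below the 2 g threshold. Recording therefore starts only when the board is actually moved. The helper below is a minimal sketch of that check; the name `exceedsThreshold` is hypothetical and is not part of the lab code.

```cpp
#include <cmath>

// Same trigger as the streaming sketch: the sum of the absolute axis
// readings (in g) must reach the threshold before recording starts.
// The function name is illustrative, not taken from the lab code.
bool exceedsThreshold(float x, float y, float z, float thresholdG = 2.0f) {
    return std::fabs(x) + std::fabs(y) + std::fabs(z) >= thresholdG;
}
```

For example, a board lying flat (0, 0, 1) gives a sum of 1.0 and does not trigger, a board tilted so gravity is split evenly (0.577, 0.577, 0.577) gives about 1.73 and still does not trigger, while a reading such as (1.2, -0.5, 0.9) gives 2.6 and starts a recording.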

Capturing Gestures Training Data

We used CoolTerm to collect the training data for gesture recognition. CoolTerm captured the serial stream to a Text/Binary file, which we then saved as a CSV file by naming it “filename” + “.csv”.
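Because the streaming sketch emits exactly 119 lines per gesture, a capture file can be cut back into individual gestures by counting lines. The following is a rough sketch of that idea, assuming the file contains only the `x,y,z` lines printed by the sketch (no header row). The names `Sample`, `parseSample`, `splitIntoGestures`, and `kSamplesPerGesture` are hypothetical and do not come from the lab code.

```cpp
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One accelerometer reading in g, as printed by the streaming sketch.
struct Sample {
    float x, y, z;
};

// Samples per gesture; matches numSamples in the streaming sketch.
constexpr std::size_t kSamplesPerGesture = 119;

// Parse one "x,y,z" line. Returns false if the line is blank or malformed.
bool parseSample(const std::string& line, Sample& out) {
    std::istringstream in(line);
    char comma1 = 0, comma2 = 0;
    if (!(in >> out.x >> comma1 >> out.y >> comma2 >> out.z)) return false;
    return comma1 == ',' && comma2 == ',';
}

// Group the parsed lines into complete gestures of kSamplesPerGesture
// samples each. A trailing partial gesture is dropped.
std::vector<std::vector<Sample>> splitIntoGestures(std::istream& capture) {
    std::vector<std::vector<Sample>> gestures;
    std::vector<Sample> current;
    std::string line;
    while (std::getline(capture, line)) {
        Sample s{};
        if (!parseSample(line, s)) continue;  // skip blank or malformed lines
        current.push_back(s);
        if (current.size() == kSamplesPerGesture) {
            gestures.push_back(current);
            current.clear();
        }
    }
    return gestures;
}
```

Counting lines only works if no sample is lost: a skipped or corrupted line in the middle of a gesture shifts every later gesture boundary, so it is worth checking that the total line count is a multiple of 119 before training.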

Training Data in TensorFlow

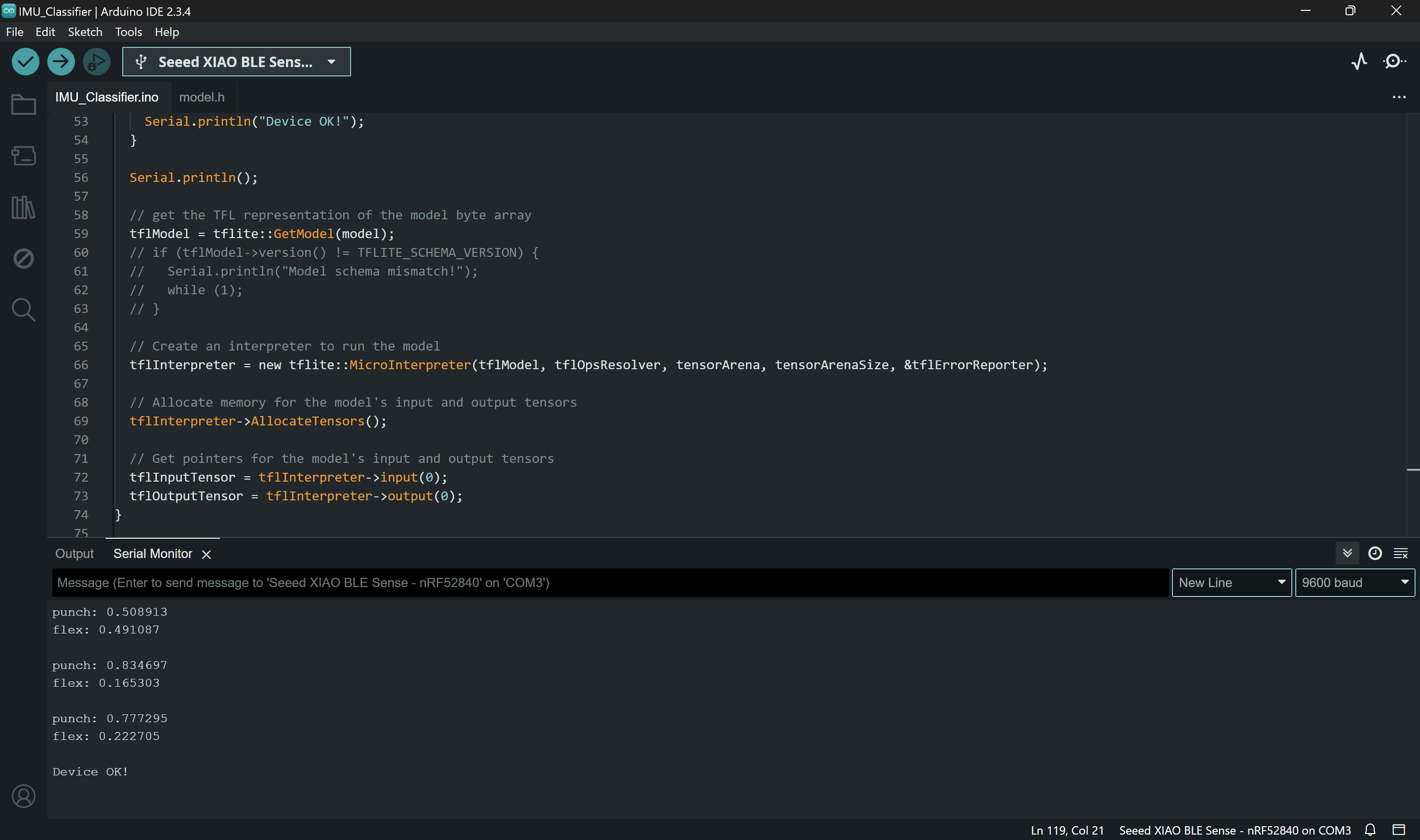

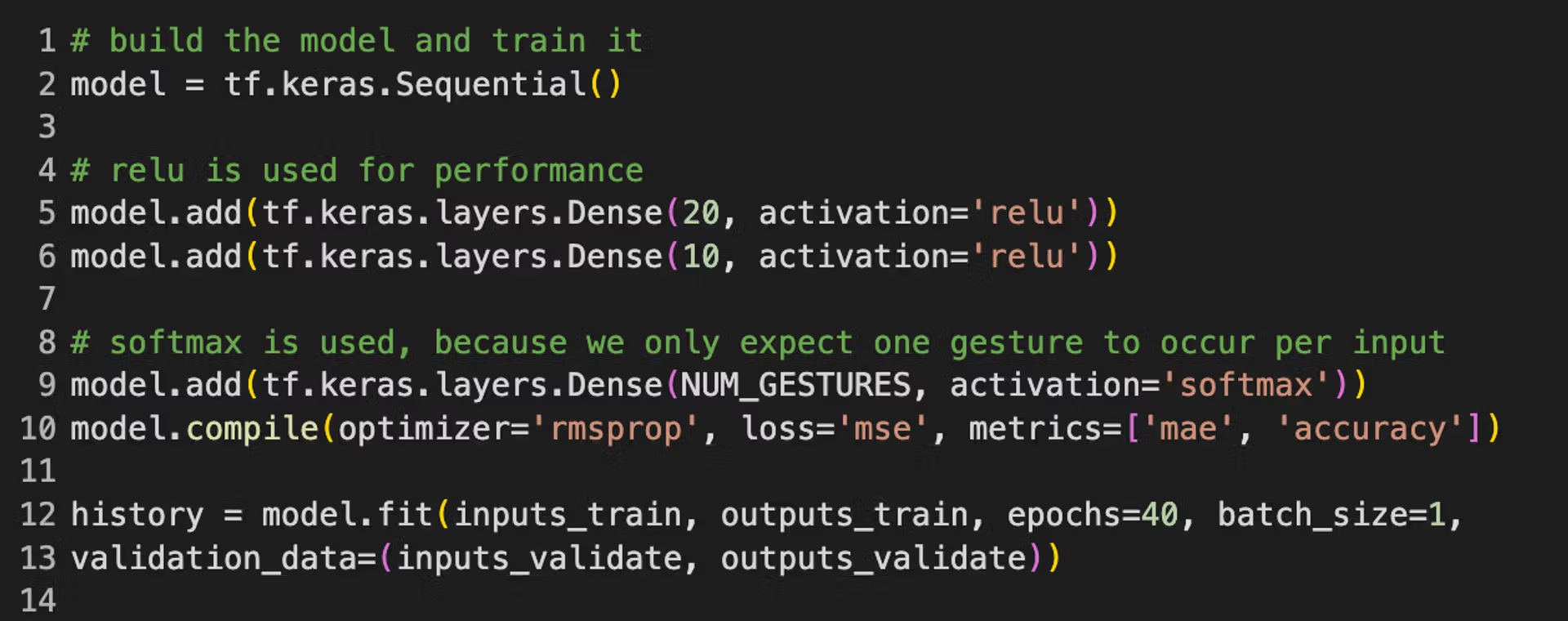

We trained the model in a Google Colab project. After training finished, we added the generated “model.h” file to the IMU Classifier sketch in the Arduino IDE.

We achieved low mean absolute error (MAE) and loss scores by training for 40 epochs and using the other hyperparameters shown below.

Figure: MAE and loss scores during model training and validation.
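For reference, mean absolute error is the average of the absolute differences between the target values and the model's predictions, so a value close to 0 means the outputs sit close to the labels. The snippet below is only an illustration of the metric, written in C++ to match the rest of the lab code. The function name `meanAbsoluteError` and the numbers in the example are made up and are not results from our training run.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Mean absolute error between target and predicted values.
// Returns 0 for empty or mismatched inputs (a choice made for this sketch).
double meanAbsoluteError(const std::vector<double>& targets,
                         const std::vector<double>& predictions) {
    if (targets.empty() || targets.size() != predictions.size()) return 0.0;
    double total = 0.0;
    for (std::size_t i = 0; i < targets.size(); ++i) {
        total += std::fabs(targets[i] - predictions[i]);
    }
    return total / static_cast<double>(targets.size());
}
```

With hypothetical targets {1, 0} and predictions {0.9, 0.2}, the absolute errors are 0.1 and 0.2, so the MAE is 0.15.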

Model Testing

Our model distinguished between the two gestures fairly well, although it seemed sensitive to the rotation of the hand: if the hand holding the microcontroller is rotated slightly during a flex gesture, the model tends to predict punch. Future work could involve fine-tuning the model to better distinguish between the gestures.